Permutation test against null distribution of respiratory phase angles#

This notebook demonstrates how to make a permutation-based test to determine whether events (e.g., target stimuli) tend to occur at a specific phase of the respiratory cycle. As some phase angles are more common than others (exhalation tend to last longer than inspiration), we compare the observed phase angles at stimulus onset to a null distribution derived from the underlying respiratory signal.

First, we import the necessary Python modules:

[1]:

import pickle

import numpy as np

from pathlib import Path

import math

import matplotlib.pyplot as plt

from pyriodic.circular import Circular

from pyriodic.viz import CircPlot

from pyriodic.permutation import permutation_test_against_null, paired_permutation_test

Single-level analysis#

[2]:

data_path = Path("../../data/respiration/intermediate")

subj_id = "0019"

file_path = data_path / f"participant_{subj_id}_preproc.pkl"

with open(file_path, 'rb') as f:

data = pickle.load(f)

circ = data["circ"]

PA = data["phase_angles"]

print(type(circ), type(PA))

<class 'pyriodic.circular.Circular'> <class 'numpy.ndarray'>

[3]:

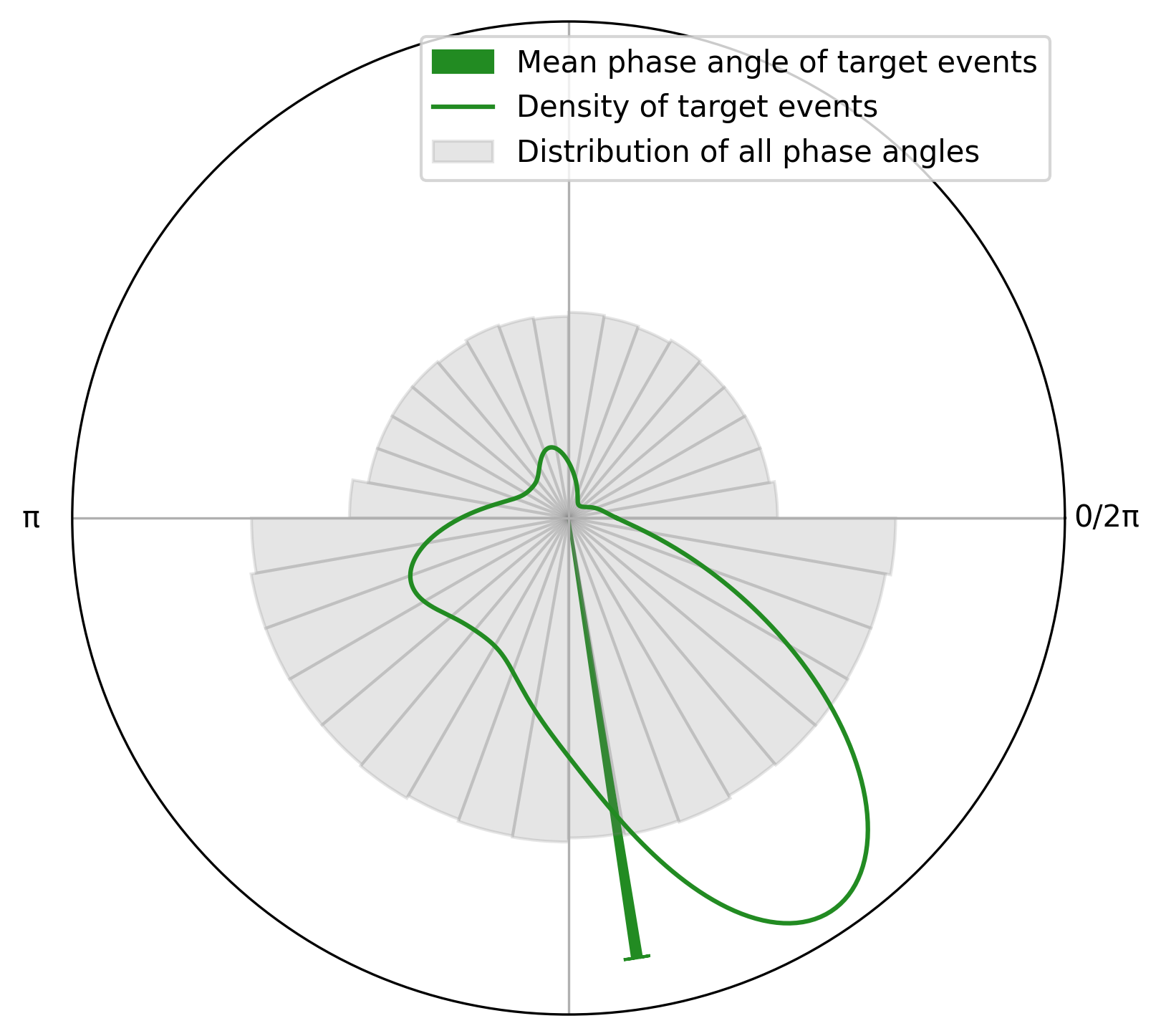

# lets generate a circular object with all the target events combined, and do a quick plot just to get an overview

circ_target = circ["target"]

plot = CircPlot(circ_target, group_by_labels=False)

plot.add_circular_mean(label = "Mean phase angle of target events")

plot.add_histogram(PA, label = "Distribution of all phase angles")

plot.add_density(label = "Density of target events")

plot.add_legend()

To test whether the phase of respiration aligns with the specific events, for example target stimuli, one must account for the fact that respiratory phase is typically not uniformly distributed. For example, expiration may occupy a longer portion of the cycle, making it more likely for events to fall within that phase by chance alone.

To address this, we employ a permutation-based framework in which the observed phase distribution of events is compared to a null distribution constructed from randomly sampled phase angles drawn from the phase angle time series.

Internally, the function:

Generates null samples by randomly drawing phase angles from the participant’s full respiratory phase time series (the phase pool). Each null sample contains the same number of phase angles as observed phase angles. The null samples represent phase distributions expected under the null hypothesis of no event-phase relationship.

For each null sample, a pairwise circular test statistic (by default, Watson’s \(U^2\)) is computed between the observed event-phase distribution and the null sample. This yields a distribution of \textit{obs-vs-null} values, quantifying how different the observed distribution is from randomly drawn respiratory phases.

To model the variability of the test statistic under the null hypothesis, the same \(U^2\) statistic is computed between randomly paired null samples. This produces a distribution of \textit{null-vs-null} \(U^2\) values.

To test whether the \textit{obs-vs-null} \(U^2\) differ systematically from \textit{null-vs-null} \(U^2\), a non-parametric permutation test is conducted. The two distributions are pooled, and their group labels (\textit{obs-vs-null} vs. \textit{null-vs-null}) are randomly permuted. For each permutation, the permutation test statistic, the Mann–Whitney \(U\) statistic, is computed between the two permuted groups. This generates a null distribution of \(U\) values under the null hypothesis of exchangeability between groups. The final p-value is calculated as the proportion of permuted \(U\) statistics greater than or equal to the observed Mann–Whitney \(U\) statistic.

By default a discretised version of Watson’s \(U^2\) statistic is used as the test statistics.

where \(k\) is the number of phase bins, \(F(j)\) is the cumulative proportion up to bin \(j\) in the first sample, and \(G(j)\) is the cumulative proportion in the second sample.

Watson’s \(U^2\) statistic can thus be interpreted as a measure of distributional difference between two circular samples, with larger values indicating greater divergence in their cumulative phase distributions.

In this case, a significant p-value indicates that the distribution of phase angles at which events occur is more different from the underlying respiratory phase distribution than would be expected by chance. Specifically, the observed data deviate more from the background than randomly drawn samples from that same background deviate from each other. Since Watson’s \(U^2\) measures the difference between cumulative distributions, this result suggests that event timing is not randomly aligned with the respiratory cycle, but instead shows a systematic phase dependence.

This is implemented in the permutation_test_against_null function in Pyriodic. It takes a Circular object containing the phase angles of interest (e.g., target stimuli) and a Circular object containing the full respiratory cycle phase angles.

[5]:

# Run permutation test

obs_stat, pval, null_samples = permutation_test_against_null(

circ_target.data,

PA,

n_null = 1000,

n_permutations = 10000,

return_null_samples=True

)

p val: 9.999000099990002e-05, observed stat: 1000000.0:.1f, mean null stat: 500079.0

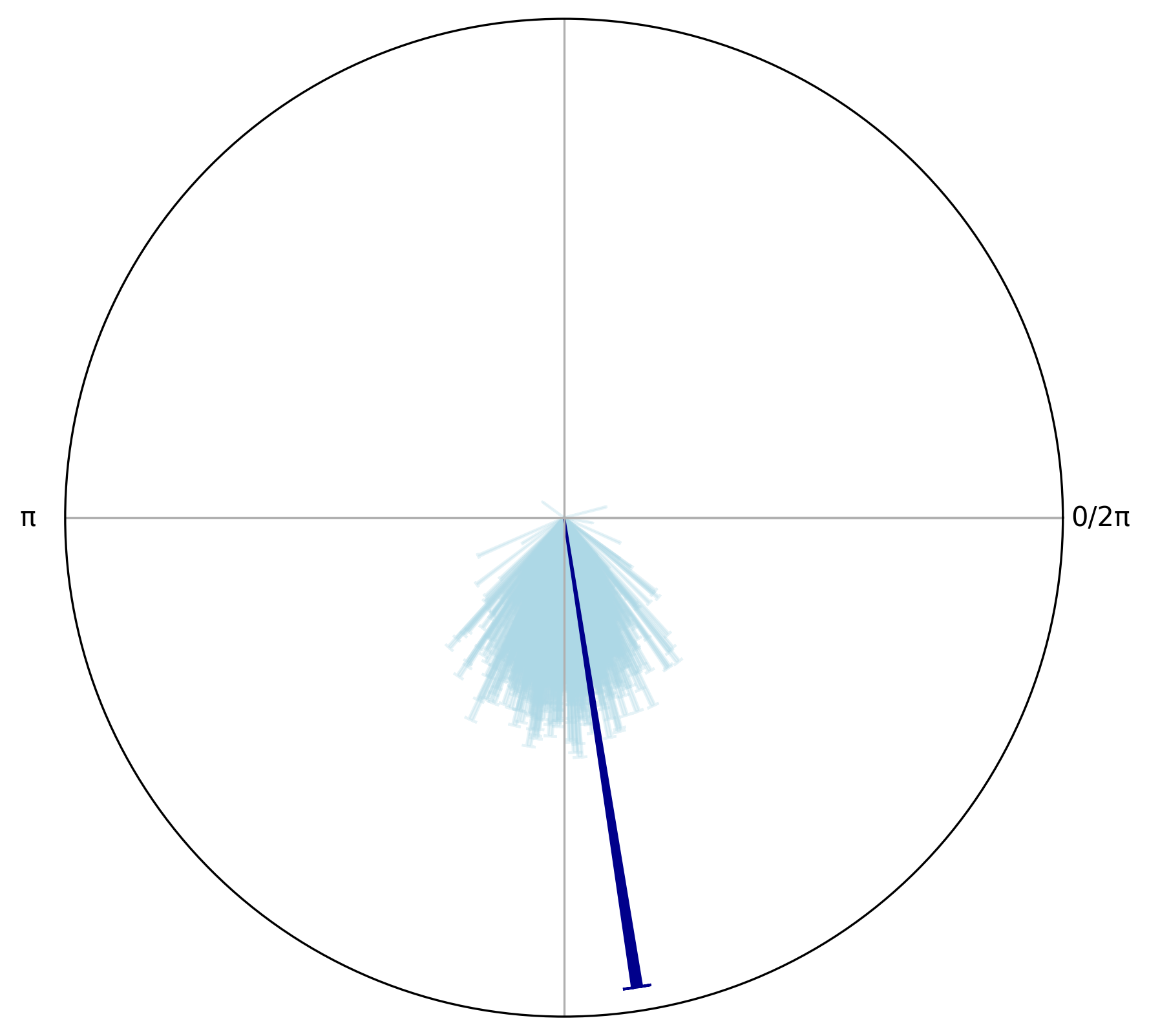

Let’s visually compare the observed phase angles of the target stimuli to a null distribution of phase angles drawn from the full respiratory cycle by plotting the mean resultant vector length and the mean angle for both the observed target phase angles and a sample of null phase angles.

[6]:

# make a circular object with the null_samples for plotting

circ_null = Circular.from_multiple(

[Circular(samp, labels=[i]*len(samp)) for i, samp in enumerate(null_samples)]

)

circplot_null = CircPlot(circ_null)

circplot_null.add_circular_mean(color = "lightblue", alpha = 0.3)

circplot_null.add_arrows(

np.array([circ_target.mean()]),

np.array([circ_target.r()]),

color="darkblue"

)

plt.tight_layout()

We can see the lenght of the resultant vector for the target phase angles is larger than the means from the null distribution samples, indicating that the target phase angles are more aligned with a specific phase of the respiratory cycle than would be expected by chance as found using the permutation test.

Group-level analysis#

[7]:

circulars = {}

pas = {}

for file_path in data_path.iterdir():

subj_id = file_path.name.split("_")[1]

with open(file_path, 'rb') as f:

data = pickle.load(f)

circulars[subj_id] = data["circ"]

pas[subj_id] = data["phase_angles"]

[18]:

rng = np.random.default_rng(seed=42)

results = {}

for subj_id in circulars:

observed = circulars[subj_id]["target"]

pa = pas[subj_id]

# Run participant-level permutation test

obs_stat, pval, null_samples, obs_vs_null, null_vs_null = permutation_test_against_null(

observed=observed.data,

phase_pool=pa,

n_null=500,

n_permutations = 1000,

rng=rng,

verbose=True,

return_null_samples=True,

return_obs_and_null_stats=True

)

# per-participant summary stats (means of distributions)

circ_null_samples = Circular.from_multiple(

[Circular(samp, labels=[i]*len(samp)) for i, samp in enumerate(null_samples)]

)

results[subj_id] = {

"obs_vs_null": obs_vs_null,

"null_vs_null": null_vs_null,

"pval": pval,

"null_samples": circ_null_samples

}

group_obs_stat, group_pval, group_null = permutation_test_within_units(

data1=obs,

data2=null,

n_permutations=10000,

alternative="greater",

verbose=True,

return_null_distribution=True

)

p val: 0.000999000999000999, observed stat: 249810.0:.1f, mean null stat: 125066.6

p val: 0.000999000999000999, observed stat: 249920.0:.1f, mean null stat: 125203.2

p val: 0.000999000999000999, observed stat: 249770.0:.1f, mean null stat: 125136.1

p val: 0.000999000999000999, observed stat: 250000.0:.1f, mean null stat: 125038.5

p val: 0.000999000999000999, observed stat: 248606.0:.1f, mean null stat: 124890.6

p val: 0.000999000999000999, observed stat: 225591.5:.1f, mean null stat: 124602.8

p val: 0.000999000999000999, observed stat: 226204.0:.1f, mean null stat: 125087.8

p val: 0.000999000999000999, observed stat: 140420.0:.1f, mean null stat: 124964.3

p val: 0.000999000999000999, observed stat: 248125.0:.1f, mean null stat: 124955.3

p val: 0.000999000999000999, observed stat: 216524.5:.1f, mean null stat: 124820.3

p val: 0.000999000999000999, observed stat: 238950.0:.1f, mean null stat: 125128.8

p val: 0.000999000999000999, observed stat: 250000.0:.1f, mean null stat: 124999.6

p val: 0.000999000999000999, observed stat: 237233.0:.1f, mean null stat: 124911.3

p val: 0.00999000999000999, observed stat: 135171.0:.1f, mean null stat: 124731.5

p val: 0.000999000999000999, observed stat: 250000.0:.1f, mean null stat: 125243.4

p val: 0.000999000999000999, observed stat: 247731.5:.1f, mean null stat: 124837.3

p val: 0.6043956043956044, observed stat: 123545.5:.1f, mean null stat: 124782.6

p val: 0.000999000999000999, observed stat: 250000.0:.1f, mean null stat: 124988.9

p val: 0.000999000999000999, observed stat: 247057.5:.1f, mean null stat: 125064.6

p val: 0.000999000999000999, observed stat: 237802.0:.1f, mean null stat: 125051.5

[np.float64(0.2722847169046439), np.float64(0.27552870392390016), np.float64(0.2560652556480381), np.float64(0.8626961599630929), np.float64(0.17874625639152664), np.float64(0.049852065498420015), np.float64(0.06901338820301782), np.float64(0.0014888001025055704), np.float64(0.18286749440260536), np.float64(0.04982603878116345), np.float64(0.0734379138180817), np.float64(0.841530894446689), np.float64(0.07427198919032256), np.float64(-0.0019997454764218597), np.float64(1.4931233780276816), np.float64(0.15227483640690065), np.float64(-0.002095555555555559), np.float64(0.7326479731148919), np.float64(0.16736308673469386), np.float64(0.09064723554285843)]

Observed statistic: 0.2910

Observed statistic = 0.2910, p = 0.0001

[ ]:

from typing import Callable, Optional, Literal

from pyriodic.utils import calculate_p_value

from tqdm.auto import tqdm

def permutation_test_within_units(

data1: list[np.ndarray],

data2: list[np.ndarray],

stat_fun: Callable[[np.ndarray, np.ndarray], float] = lambda x, y: np.mean(x - y),

n_permutations: int = 5000,

rng: Optional[np.random.Generator] = None,

alternative: Literal["greater", "less", "two-sided"] = "two-sided",

verbose: bool = True,

return_null_distribution: bool = False

) -> tuple:

"""

Performs a paired permutation test, where data at index 1 in `data1` is assumed to be paired with data at index 1 in `data2`.

This is useful when each unit (e.g., participant, sensor, session) has paired

data across two conditions, but the number of observations per condition may vary.

Label permutations are performed within each unit to preserve intra-unit structure.

Parameters

----------

data1 : list of np.ndarray

List of arrays, where each array contains data for a unit (e.g., participant).

data2 : list of np.ndarray

List of arrays, where each array contains data for a unit (e.g., participant). The indices for participants (or some other pairing) must match `data1`.

stat_fun : Callable, default = lambda x, y: np.mean(x - y)

Function that computes a scalar test statistic for each pair of data arrays.

n_permutations : int, default = 1000

Number of permutations to generate the null distribution.

rng : np.random.Generator, optional

NumPy random number generator for reproducibility.

alternative : {"greater", "less", "two-sided"}, default = "two-sided"

The alternative hypothesis to test.

verbose : bool, default = True

If True, print the observed statistic and p-value.

return_null_distribution : bool, default = False

If True, return the full null distribution in the output.

Returns

-------

observed_stat : float

The observed group-level statistic (mean of unit-level stats).

p_value : float

P-value under the null distribution.

null_distribution : np.ndarray, optional

Returned if `return_null_distribution` is True.

"""

if rng is None:

rng = np.random.default_rng()

data1 = [np.asarray(d) for d in data1]

data2 = [np.asarray(d) for d in data2]

if len(data1) != len(data2):

raise ValueError("data1 and data2 must have the same length.")

# Compute observed unit-level stats and average

unit_stats = [stat_fun(d1, d2) for d1, d2 in zip(data1, data2)]

observed_stat = np.mean(unit_stats)

null_distribution = []

for i in tqdm(range(n_permutations), desc="Generating null distribution"):

permuted_stats = []

for d1, d2 in zip(data1, data2):

n1, n2 = len(d1), len(d2)

combined = np.concatenate([d1, d2])

permuted = rng.permutation(combined)

perm_d1 = permuted[:n1]

perm_d2 = permuted[n1:]

permuted_stats.append(stat_fun(perm_d1, perm_d2))

null_distribution.append(np.mean(permuted_stats))

null_distribution = np.array(null_distribution)

p_value = calculate_p_value(observed_stat, null_distribution, alternative)

if verbose:

print(f"Observed statistic = {observed_stat:.4f}, p = {p_value:.4f}")

if return_null_distribution:

return observed_stat, p_value, null_distribution

return observed_stat, p_value

null = [results[subj_id]["null_vs_null"] for subj_id in results]

obs = [results[subj_id]["obs_vs_null"] for subj_id in results]

group_obs_stat, group_pval, group_null = permutation_test_within_units(

data1=obs,

data2=null,

n_permutations=10000,

alternative="greater",

verbose=True,

return_null_distribution=True

)

[np.float64(0.2722847169046439), np.float64(0.27552870392390016), np.float64(0.2560652556480381), np.float64(0.8626961599630929), np.float64(0.17874625639152664), np.float64(0.049852065498420015), np.float64(0.06901338820301782), np.float64(0.0014888001025055704), np.float64(0.18286749440260536), np.float64(0.04982603878116345), np.float64(0.0734379138180817), np.float64(0.841530894446689), np.float64(0.07427198919032256), np.float64(-0.0019997454764218597), np.float64(1.4931233780276816), np.float64(0.15227483640690065), np.float64(-0.002095555555555559), np.float64(0.7326479731148919), np.float64(0.16736308673469386), np.float64(0.09064723554285843)]

Observed statistic: 0.2910

Observed statistic = 0.2910, p = 0.0001

Is this effect consistent across all participants?#

[ ]:

n_subjects = len(results)

n_cols = 4

n_rows = math.ceil(n_subjects / n_cols)

fig, axes = plt.subplots(

n_rows, n_cols,

dpi = 300,

figsize = (n_cols*3, n_rows*3),

subplot_kw={'projection': 'polar'},

sharex=True,

sharey=True

)

axes = axes.flatten()

for i, subj_id in enumerate(circulars):

tmp_result = results[subj_id]

circ_obs_target = circulars[subj_id]["target"]

circ_null = tmp_result["null_samples"]

ax = axes[i]

tmp_plot = CircPlot(circ_null, ax=ax)

tmp_plot.add_circular_mean(alpha = 0.1, color = "lightblue")

tmp_plot.add_arrows([circ_obs_target.mean()], [circ_obs_target.r()], label = "Observed", color = "darkblue")

ax.set_title(f"{subj_id}, pval = {tmp_result['pval'].round(6)}", fontsize=8)

# Hide unused axes

for j in range(i + 1, len(axes)):

axes[j].axis("off")

plt.tight_layout()